DOI registration: 10.69849/revistaft/ni10202212311427

Rafael Carvalho Turatti

Abstract

Artificial Intelligence (AI) holds immense potential to transform sectors such as healthcare, education, and finance, offering significant advances in efficiency, personalization, and automation. However, its rapid growth brings complex ethical issues to the fore that cannot be overlooked. The use of AI, often powered by vast amounts of data and opaque algorithms, poses significant risks in sensitive areas such as data privacy, algorithmic discrimination, and transparency in automated decision-making. Furthermore, the lack of human oversight and monitoring can lead to negative consequences, such as denial of services or discrimination in processes like credit granting. Biases in algorithms, if left unaddressed, can perpetuate social inequalities and disproportionately affect vulnerable groups. The implementation of AI must be accompanied by clear ethical guidelines and by the education and certification of the professionals involved in its development. Ethical responsibility for the application of AI should be shared among developers, businesses, governments, and professionals, with collaboration from experts across various fields, including computer science, law, and philosophy, to create frameworks that anticipate future challenges. It is crucial that principles such as transparency, fairness, accountability, privacy, and sustainability be central to the development of socially responsible AI technologies. Dynamic policies and collaboration among academic institutions, businesses, and governments are essential to ensure the ethical use of AI. Only through a coordinated approach that balances innovation with responsibility can we mitigate the risks of AI and secure its benefits for social and environmental well-being.

Keywords: Artificial Intelligence, Ethics in AI, Algorithmic Discrimination, Data Privacy, AI Governance.

Artificial Intelligence (AI) has been transforming various sectors, including healthcare, finance, and education, bringing both significant advancements and critical ethical concerns. Key challenges associated with AI include transparency, algorithmic bias, privacy issues, and accountability, all of which demand clear guidelines to ensure its responsible and secure use.

In healthcare, AI applications are improving diagnostic accuracy, personalizing treatments, and optimizing hospital operations. However, concerns over patient data privacy are paramount, as systems handling sensitive information must be safeguarded against breaches and misuse. Additionally, algorithmic bias poses risks of unequal treatment among diverse populations, which underscores the need for continuous auditing and diverse data sources. Another challenge is accountability in the event of errors, as automated decisions raise complex questions about how responsibility is divided among developers, healthcare professionals, and the system itself.

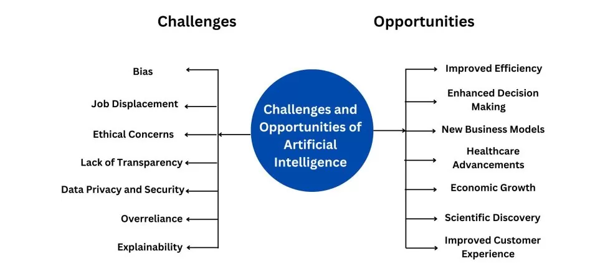

Figure 1: Challenges and Opportunities of AI.

The financial sector has seen widespread use of AI for risk analysis, fraud detection, and investment automation. However, the opacity of AI algorithms makes it difficult to understand the criteria behind credit approvals and financial product pricing. These opaque models can perpetuate inequalities, leading to discriminatory practices such as unfair loan denials or higher interest rates based on biased historical data. Moreover, the responsible collection and processing of financial data are essential to protect individuals’ privacy and avoid misuse.
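The kind of disparity described above can be made measurable with a simple audit of decision outcomes. The following is a minimal sketch in Python, not a method taken from the studies cited here: the function names, the group labels, and the toy data are hypothetical, and the ratio it reports (lowest group approval rate divided by the highest) is only one of several fairness measures an auditor might choose.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the share of approved applications for each group.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group had any approvals, so there is no gap to report
    return min(rates.values()) / highest


# Hypothetical toy data: (group label, loan approved?)
sample = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = approval_rates(sample)
ratio = disparate_impact_ratio(rates)
print(rates)
print(round(ratio, 3))
```

A ratio well below 1.0 signals that one group is approved far less often than another and that the model and its training data deserve closer review. Any cut-off used to trigger such a review (for example 0.8, borrowed from the "four-fifths" rule of thumb in US employment guidance) is an assumption of this sketch rather than something the text prescribes.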

In education, AI has enhanced personalized learning by tailoring content to individual student needs. However, AI cannot replace human interaction between students and teachers, so it is vital that technology supplements rather than supplants traditional educational methods. A major concern is equitable access to technology: well-funded schools are far better placed to benefit from AI advancements, which may exacerbate educational inequalities. Additionally, platforms that collect data on student performance must ensure this information is protected and used only with proper consent.

To mitigate the risks and ensure ethical AI development, several guidelines must be adhered to. These include ensuring algorithm transparency and explainability, prioritizing bias mitigation through regular audits, safeguarding data privacy, and maintaining human oversight in critical decisions made by AI systems. It is also important to promote equitable access to AI technologies to ensure fair distribution of benefits across society.
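Human oversight in critical decisions, one of the guidelines listed above, can be illustrated with a simple routing rule: the system automates only clear-cut cases and hands everything else to a person. The sketch below is an illustration under stated assumptions, not a prescribed design. The `Decision` record, the `decide` function, and the thresholds of 0.8 and 0.2 are all hypothetical, and the score is assumed to be a model output between 0 and 1.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    outcome: str  # "approve", "deny", or "human_review"
    score: float  # model score in [0, 1]
    reason: str   # short explanation kept for later audits


def decide(score, approve_at=0.8, deny_at=0.2):
    """Automate only clear-cut cases and route the rest to a human reviewer.

    The thresholds are hypothetical placeholders, not recommended values.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= approve_at:
        return Decision("approve", score, f"score at or above {approve_at}")
    if score <= deny_at:
        return Decision("deny", score, f"score at or below {deny_at}")
    return Decision("human_review", score, "score falls in the uncertain band")


for s in (0.93, 0.55, 0.10):
    print(decide(s))
```

Recording a reason with every outcome also supports the transparency guideline, since each automated decision leaves a trace that can be inspected later. A stricter policy could send every denial to human review as well; that choice depends on the stakes of the decision.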

AI ethics remains an ongoing challenge, requiring collaboration among governments, corporations, and civil society. Only through clear guidelines, transparency, and commitment to equity can AI be used responsibly and beneficially for all.

The study by Hagendorff (2019) explores the progress in AI research, development, and applications, focusing on the ethical debate surrounding these advancements. The study compares 22 ethical guidelines for AI, identifying common principles and gaps within the field, and evaluates the practical implementation of these guidelines in AI research and applications.

Jobin, Ienca, and Vayena (2019) examine the growing body of ethical guidelines produced by various organizations over the past five years. They identify five core principles—transparency, justice, non-maleficence, responsibility, and privacy—and analyze the differences in their interpretation and application, underscoring the need for deeper ethical analysis and effective implementation.

Siau and Wang (2020) discuss AI’s advancements in areas like facial recognition, medical diagnosis, and autonomous vehicles, while highlighting the ethical and moral issues that remain in the field. They differentiate between “ethics of AI” and “ethical AI,” emphasizing the importance of understanding and addressing these issues to develop technologies aligned with ethical standards.

Larsson (2020) adopts a socio-legal perspective to analyze the use of ethical guidelines in AI governance, particularly in the European Union. The study highlights challenges in aligning technological progress with legal frameworks and the need for a multidisciplinary approach to AI governance.

Khan et al. (2021) conduct a systematic literature review to identify ethical principles and challenges in AI, revealing a global consensus on key principles such as transparency, privacy, and accountability, while also identifying barriers to the adoption of these principles.

Christoforaki and Beyan (2022) review the rise of data-driven AI applications and their ethical implications. The study provides an overview of AI ethics, examining the moral issues related to large datasets, non-symbolic algorithms, and accountability, and concludes by emphasizing the importance of responsibility and sustainability in AI development, as well as the need for professional education to prevent unethical choices.

In conclusion, artificial intelligence (AI) offers vast potential to transform a wide range of sectors, including healthcare, education, finance, and others, promising significant advances in efficiency, personalization, and automation. However, its rapid growth and the widespread dissemination of data-driven applications raise profound ethical issues that cannot be ignored. The use of AI, often fueled by large volumes of data and opaque algorithms, presents significant risks in sensitive areas such as data privacy, algorithmic discrimination, transparency in automated decisions, and accountability in the event of system failures. Concerns regarding data security and privacy protection become even more intense when considering the vast amount of personal information being processed without proper oversight.

Additionally, biases in algorithms, if not carefully monitored and corrected, can perpetuate or even amplify social inequalities, disproportionately affecting vulnerable and marginalized groups. The implementation of AI without proper human oversight and ethical consideration can lead to automated decisions that have significant consequences on people’s lives, such as the denial of services or discrimination in processes like credit granting and hiring. Thus, the responsibility for the ethical application of AI needs to be shared among developers, professionals, companies, and governments, and should involve both the creation of clear ethical guidelines and the education and certification of professionals involved in AI development.

Therefore, it is essential that AI ethics be addressed in a multidisciplinary manner, bringing together experts from various fields, including computer science, law, sociology, and philosophy, to create frameworks that not only guide practice but also anticipate future challenges. Implementing principles such as transparency, equity, accountability, privacy, and sustainability should be central to the development of AI technologies that are socially responsible and effective. Promoting ethical AI also requires constant reflection on the long-term implications of these technologies, ensuring that benefits are widely distributed and that costs, such as environmental impacts, are minimized.

With the rapid evolution of AI, it is crucial that policies and regulations keep pace with this progress in a dynamic manner, adapting to new realities and emerging challenges. Collaboration among different social actors, including academic institutions, private companies, government bodies, and civil society organizations, will be key to developing effective governance that ensures AI is used responsibly, fairly, and for the benefit of society as a whole. Only through a coordinated approach, balancing innovation with responsibility, can we harness the potential of AI while mitigating its risks and ensuring that it contributes positively to human, social, and environmental well-being.

References

Christoforaki, M., & Beyan, O. (2022). AI Ethics—A Bird’s Eye View. Applied Sciences, 12(9), 4130. https://doi.org/10.3390/app12094130

Hagendorff, T. (2019). The Ethics of AI Ethics: An Evaluation of Guidelines. Minds and Machines, 30, 99–120. https://doi.org/10.1007/s11023-020-09517-8

Jobin, A., Ienca, M., & Vayena, E. (2019). The global landscape of AI ethics guidelines. Nature Machine Intelligence, 1, 389–399. https://doi.org/10.1038/s42256-019-0088-2

Khan, A., Badshah, S., Liang, P., Khan, B., Waseem, M., Niazi, M., & Akbar, M. (2021). Ethics of AI: A Systematic Literature Review of Principles and Challenges. Proceedings of the 26th International Conference on Evaluation and Assessment in Software Engineering. https://doi.org/10.1145/3530019.3531329

Larsson, S. (2020). On the Governance of Artificial Intelligence through Ethics Guidelines. Asian Journal of Law and Society, 7, 437–451. https://doi.org/10.1017/als.2020.19

Siau, K., & Wang, W. (2020). Artificial Intelligence (AI) Ethics: Ethics of AI and Ethical AI. Journal of Database Management, 31, 74–87. https://doi.org/10.4018/jdm.2020040105