DOI REGISTRATION: 10.5281/zenodo.10002381

Taise Grazielle da Silva Batista [1]

Thiago João Miranda Baldivieso [2]

Luiz Carlos Pacheco Rodrigues Velho [3]

Paulo Fernando Ferreira Rosa [4]

Abstract

Studies in computer vision involving three-dimensional reconstruction are increasing, which demonstrates the importance of this topic, a subdomain of photogrammetry. Applications that use Unmanned Aerial Vehicles (UAVs) for terrain mapping, with photogrammetry used to create three-dimensional environments, facilitate structural analyses and make it possible to obtain topographic information from captured land surfaces, using modern methodologies applicable in both civil and military areas. The subject is relevant because photogrammetry with UAVs provides easy access, precision, and savings in mission time and equipment. This paper aims to develop three-dimensional reconstruction using aerial images in different environments. During the study, experiments were carried out with aircraft in outdoor and indoor environments. After the acquisition of aerial images, reconstruction was performed using dedicated photogrammetry software, both commercial and open-source, followed by a qualitative evaluation of the results. The paper concludes with indications of improvements and of continued research on artificial intelligence techniques, using machine learning and reinforcement learning to optimize the generated models.

Keywords: Three-Dimensional Reconstruction. UAV. Aerophotogrammetry.

1 INTRODUCTION

With increasing demand and practical needs, the functions and performance of Unmanned Aerial Vehicles (UAVs) are continually advancing. These advances are mainly driven by progress in microprocessors, sensing, and communications, and by open demands in computer vision and computer graphics for the reconstruction of objects and three-dimensional environments. Furthermore, autonomous and semi-autonomous UAVs, characterized by total or partial independence from human operators, provide greater visibility in the image, since the operator does not need to aim the aircraft during the entire mission. In civil and military applications, this has also reduced operating costs and encouraged financing initiatives in the area.

The use of unmanned aerial vehicles for 3D mapping and reconstruction requires mission planning that takes into account the object or environment to be imaged and the factors that may affect the process, such as weather, lighting, target geometry, camera calibration, and the type of aircraft used. From the reference works surveyed, it can be seen that some parameters, such as position control, are often not taken into account. It is important to observe the parameters that influence the result, because the choice made, including the choice not to use a parameter, holds for the entire process.

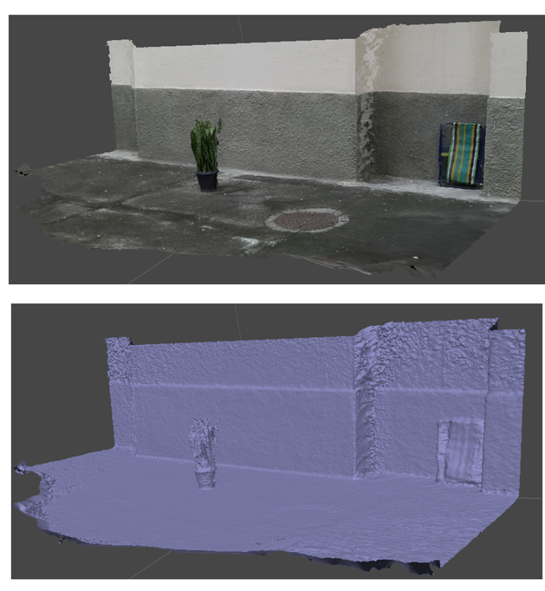

Figure 1: Mapped and reconstructed outdoor area.

Three-dimensional reconstruction is a highly researched area in computer vision and scientific visualization. Its objective is to obtain a three-dimensional geometric representation of environments or objects, making it possible to inspect details, measure properties, and reproduce them in different materials. Applications with UAVs can help in architecture, 3D cartography, robotics, augmented reality, conservation of monuments, and historical heritage [4].

There are several ways to get information related to the 3D geometry of an object, environment, or body. They can be acquired by laser scanning, photographs, sonar, tomography, and 3D sonar.

Photo-based systems make 3D reconstructions from a single photo or from several photos taken at different angles. When multiple photos are used, the first step is image registration, which consists of transforming the different sets of data into a single coordinate system.

After this step, visual reference points are defined, either generated automatically by the reconstruction software or entered manually. These points establish common visual landmarks in the scene, which identify shared edges of the object across the photographs. From the processing of this information, the three-dimensional geometry is obtained.

In addition, the UAV tags each photograph with the position reported by its Global Positioning System (GPS) sensor and the time of capture; this information is also used in the processing to georeference the model. Figure 1 presents the result of the 3D reconstruction of an outdoor scene. The green dots are the poses of the drone’s camera.

Many have associated drones with the unmanned aircraft used in the defense industry, but as the technology continues to develop and improve, drones have gotten smaller and cheaper. Consumers can now buy their own instrument for just under $600 and such technology is already proving useful for a wide variety of purposes, including potential uses for architects such as site analysis for construction.

However, this technology could have much broader consequences, not only on the airspace above our streets, but also on the way we design for increasing civil and commercial drone traffic. Just as other technologies, whether cars or surveillance systems, have shaped our urban infrastructure, so must there be an emerging network of infrastructure for pilotless technology. As drones become increasingly accurate and agile, opportunities arise for their use in urban areas. If these devices can be programmed to learn repeated maneuvers using cameras and sensors, it is not utopian to say that they will soon be able to learn to navigate increasingly complex vertical cities.

Various application fields, such as industry, space science, and medicine, broadly explore the areas of computer vision and image processing. Research seeks new methods to solve problems and drive technological development. The use of images aims to extract the information they contain and treat it in a quantitative and qualitative way. As methodologies advance in the search for computerized solutions, research aims to equip machines to process images with the same capacity as humans and to improve data and results.

In image processing, analyzing an image involves not only the information present in it but also previously stored knowledge. This is the case, for example, in remote sensing applications, where specialists handle a large amount of information when inferring useful content from an image [2].

Images are very important for surface mapping and recognition through three-dimensional modeling. An image set may present several problems, such as not being evenly sampled or containing noise, which affect the quality of the generated model. In this work we present three-dimensional reconstruction from images that map the surface of the chosen object and that can be used for recognition.

Section 2 of this work presents the methodology, together with some concepts relevant to understanding it. Section 3 describes the experiments that were carried out. Section 4 discusses the results, and Section 5 presents the conclusion, with an analysis of what was proposed.

2 METHODOLOGY

In recent years, aircraft used for terrain mapping in civil and military applications have been widely explored, especially unmanned aircraft and their use related to three-dimensional reconstruction.

Computer vision is defined as the science and technology of machines that see. In [8], the author develops theory and technology for the construction of artificial systems for obtaining information from images or any multidimensional data.

Advances in technology seek to make machines capable of what a human is able to accomplish. Machine vision was initially restricted to the construction of lenses and cameras that capture and handle images with the characteristics of a human eye. In recent years, however, this has changed with the growth of artificial intelligence and the application of neural networks, together with advances in algorithms that improve themselves from data, known as machine learning [1]. Computer vision can thus be considered a sub-area of artificial intelligence that addresses how machines view the environment. It can also be defined as a body of knowledge that seeks to model vision artificially and to replicate its functions through advanced software and hardware.

UAVs bring ease and modernity to these activities. The methodology for obtaining images with UAVs begins with the choice of the object or structure of interest. Once the object is defined, the aircraft flies around it and captures images, observing overlap, luminosity, and other factors considered important.
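The overlap requirement can be turned into a distance between consecutive exposures. The following is a minimal sketch, assuming a downward-pointing camera over flat ground and a simple pinhole model; the function names and the numeric values are illustrative assumptions, not parameters of the flights described in this paper.

```python
def ground_footprint(height_m, sensor_dim_mm, focal_length_mm):
    """Ground length covered along one image dimension (pinhole model, nadir view)."""
    return height_m * sensor_dim_mm / focal_length_mm


def photo_spacing(footprint_m, overlap):
    """Distance between consecutive exposures for an overlap fraction in [0, 1)."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint_m * (1.0 - overlap)


# Hypothetical values: 60 m flight height, 6 mm sensor side, 4 mm focal length.
footprint = ground_footprint(height_m=60.0, sensor_dim_mm=6.0, focal_length_mm=4.0)
spacing = photo_spacing(footprint, overlap=0.75)
print(f"footprint: {footprint:.1f} m, spacing between photos: {spacing:.1f} m")
```

A higher overlap shortens the spacing and therefore increases the number of images that the reconstruction software has to match.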

The captured images are processed in the reconstruction tools to generate the model. Each tool has its own processing characteristics, but in general the methodology takes the set of images as input data, refines these images to extract details (features), and matches points across all images based on these details. At the end of the matching, the mapped points form a point cloud, from which the 3D model is built.
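The matching step can be illustrated with a small example. This is a sketch under stated assumptions: descriptors are plain numeric tuples, matching is a brute-force nearest-neighbour search, and ambiguous matches are rejected with a distance-ratio heuristic. The ratio test and the name `match_descriptors` are assumptions introduced here for illustration; the tools used in this work may match features differently.

```python
import math


def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Return (i, j) pairs where desc_a[i] is unambiguously closest to desc_b[j]."""
    matches = []
    for i, da in enumerate(desc_a):
        # Distances from descriptor i of image A to every descriptor of image B.
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if len(dists) < 2:
            continue
        (best, j), (second, _) = dists[0], dists[1]
        # Keep the match only if the best candidate is clearly better than the runner-up.
        if best < ratio * second:
            matches.append((i, j))
    return matches


# Toy 2D descriptors (real descriptors have many more dimensions).
desc_a = [(0.0, 1.0), (1.0, 0.0), (0.5, 0.5)]
desc_b = [(0.9, 0.1), (0.1, 0.95), (5.0, 5.0)]
matches = match_descriptors(desc_a, desc_b)
print(matches)
```

In this toy data the third descriptor of image A lies almost halfway between two candidates in image B, so it is discarded rather than matched.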

The 3D reconstruction software applied here uses the Structure from Motion (SfM) method [5], which infers the 3D geometry of the object to be reconstructed from the relative motion between camera and scene. The methodology first compares the keypoint descriptors identified in the images to find matches between two or more similar images. An optimization procedure, bundle adjustment, is then performed to infer the camera positions for the collection of images.

Structure from Motion (SfM) is a range imaging technique studied in machine vision and visual perception. The SfM methodology uses the relative motion between camera and scene to infer the 3D geometry of the object to be reconstructed. It takes into account the trajectories of object points in the image plane and determines the 3D shape and motion that best reproduce most of the estimated trajectories. The process is similar to stereoscopic vision in that two or more images of a scene are obtained from different points of view [6].
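The analogy with stereoscopic vision can be made concrete: once the same point is seen from two known camera positions, its 3D position follows from intersecting the two viewing rays. The sketch below uses the midpoint of the shortest segment between the two rays, a textbook simplification chosen here for illustration and not the method of any tool used in this paper; the function names and the camera positions are assumptions.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def triangulate_midpoint(o1, d1, o2, d2):
    """Midpoint of the shortest segment between rays o1 + s*d1 and o2 + t*d2."""
    w0 = [a - b for a, b in zip(o1, o2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; depth cannot be recovered")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = [o + s * k for o, k in zip(o1, d1)]
    p2 = [o + t * k for o, k in zip(o2, d2)]
    return [(u + v) / 2 for u, v in zip(p1, p2)]


# Two hypothetical camera centres one unit apart, both observing the same point.
point = triangulate_midpoint(
    o1=[0.0, 0.0, 0.0], d1=[0.5, 0.0, 5.0],
    o2=[1.0, 0.0, 0.0], d2=[-0.5, 0.0, 5.0],
)
print(point)
```

When the two viewpoints are very close together the rays become nearly parallel and the estimate is poorly conditioned, which is one reason why images from clearly different positions are needed.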

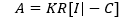

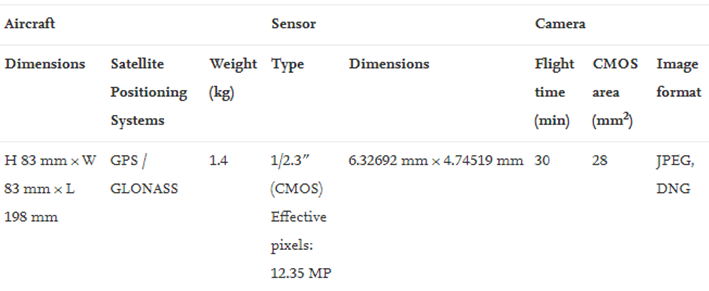

Figure 2: Result after structure-from-motion estimation. The projection of a 3D object point $\mathbf{P}_j$ in the camera image at time $k$ gives the tracked 2D feature point $\mathbf{p}_{j,k}$ [6].

Consider an image sequence consisting of $K$ images $I_k$, with $k = 1, \dots, K$. Let $\mathbf{A}_k$ be the $3 \times 4$ camera matrix corresponding to image $I_k$. Using the corresponding feature points, the parameters of the camera model $\mathbf{A}_k$ are estimated for each frame [5]. As shown in Figure 2, for each feature track a corresponding 3D object point is determined, resulting in a set of $J$ 3D object points $\mathbf{P}_j$, with $j = 1, \dots, J$, where:

$$\mathbf{p}_{j,k} \sim \mathbf{A}_k \, \mathbf{P}_j$$

Here, the 2D feature points $\mathbf{p}_{j,k} = (p_x, p_y, 1)^\top$ and the 3D object points $\mathbf{P}_j = (P_x, P_y, P_z, 1)^\top$ are given in homogeneous coordinates.

The camera matrix $\mathbf{A}$ can be factorized into

$$\mathbf{A} = \mathbf{K} \, \mathbf{R} \, [\, \mathbf{I} \mid -\mathbf{C} \,]$$

The $3 \times 3$ calibration matrix $\mathbf{K}$ contains the intrinsic camera parameters (e.g., focal length or principal point offset), $\mathbf{R}$ is the $3 \times 3$ rotation matrix representing the camera orientation in the scene, and the camera center $\mathbf{C}$ describes the position of the camera in the scene. SfM is considered state of the art in reconstruction software because it solves for camera poses and lens calibration in addition to defining geometries [5].
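The projection described above can be checked numerically. The sketch below assumes the common pinhole factorization A = K R [I | -C], so that a 3D point P maps to K R (P - C) before the homogeneous division, and assumes the camera looks along its positive z axis. It also computes a reprojection error, the image-plane distance between an observed and a predicted feature point, which is the kind of quantity that bundle adjustment minimizes. All numeric values and function names are illustrative assumptions.

```python
import math


def mat_vec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]


def project(K, R, C, P):
    """Project the 3D point P to pixel coordinates using A = K R [I | -C]."""
    centered = [p - c for p, c in zip(P, C)]  # [I | -C] applied to (P, 1)
    cam = mat_vec(R, centered)                # rotate into the camera frame
    x, y, w = mat_vec(K, cam)                 # apply the intrinsic parameters
    if w <= 0:
        raise ValueError("point is not in front of the camera")
    return (x / w, y / w)                     # homogeneous division


def reprojection_error(observed, predicted):
    """Euclidean distance in pixels between observed and predicted image points."""
    return math.hypot(observed[0] - predicted[0], observed[1] - predicted[1])


# Hypothetical intrinsics: focal length 1000 px, principal point (960, 540).
K = [[1000.0, 0.0, 960.0], [0.0, 1000.0, 540.0], [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # camera aligned with the scene axes
C = [0.0, 0.0, -10.0]                                     # camera 10 units behind the origin

pixel = project(K, R, C, P=[1.0, 2.0, 0.0])
error = reprojection_error(observed=(1061.0, 740.0), predicted=pixel)
print(pixel, error)
```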

3D reconstruction is an old problem and several tools are available. Improving the generated model, or the process itself, has become a focus of research, including forms of reconstruction that combine current AI techniques with the SfM used by these programs. The work in [1] provides a comprehensive survey of recent developments in this field, covering works that use deep learning techniques to estimate the 3D shape of generic objects. It analyzes and compares the performance of some key papers, summarizes some of the open problems in the field, and discusses promising directions for future research.

3 EXPERIMENTS

To perform the experiment, an object is chosen and the area for acquiring the necessary images is delimited. The flight with the remotely piloted aircraft is then carried out, capturing the images that will later be processed. For this work the flight was performed without route planning; whether applying such planning is relevant remains a point to be examined.

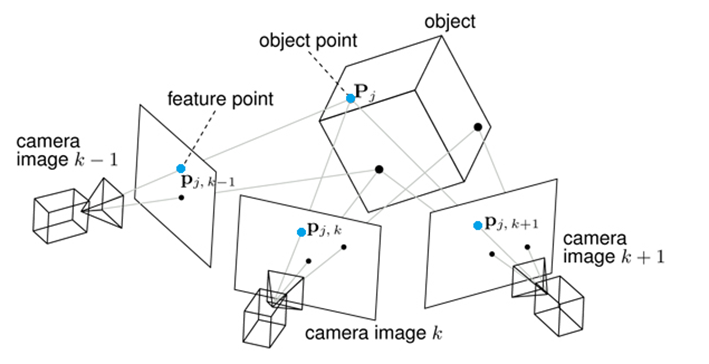

For our field test, a DJI Mavic Pro was used; Figure 3 presents the drone specifications. The flight time of a UAV is highly dependent on flight speed and wind speed. The drone was equipped with a low-cost GPS receiver and a magnetic compass. The inertial measurement unit (IMU) was used to obtain the actual orientation, acceleration, and barometric altitude.

Figure 3: MavicPro specs.

Several experiments were performed, as listed in Table 1: outdoor and indoor experiments with different characteristics, using UAVs and also ground cameras. After the images were taken, reconstructions were performed in different software packages, such as PIX4D, Metashape, OpenDroneMap, and COLMAP, using default settings.

Table 1: Generated datasets, number of images, and reconstruction software used.

| Dataset generated | Acquisition device | Images | Tools marked (of PIX4D, Metashape, ODM, RC) |
|---|---|---|---|
| Crystal’s valley | MavicPro | 337 | X X X |
| BOC 60 – High Res. | MavicPro | 302 | X X X |
| BOC 60 – Med. Res. | MavicPro | 169 | X X X |
| BOC 60 – Low Res. | MavicPro | 138 | X X X |
| LARC | Sub-250 | 150 | X X |
| PIRF – Fan Scene | Tello | 62 | X |
| PIRF – Human Scene | Tello | 50 | X X |
| PIRF – Bags Scene | Smartphone | 217 | X |
| Object – Plant | Tello | 35 | X X X |
| Object – Robot | Smartphone | 154 | X |
| Object – Castell | Smartphone | 64 | X X X X |

BOC 60 is the new campus of the Institute of Pure and Applied Mathematics (IMPA) to be built in Jardim Botanico, in the south of the city of Rio de Janeiro.

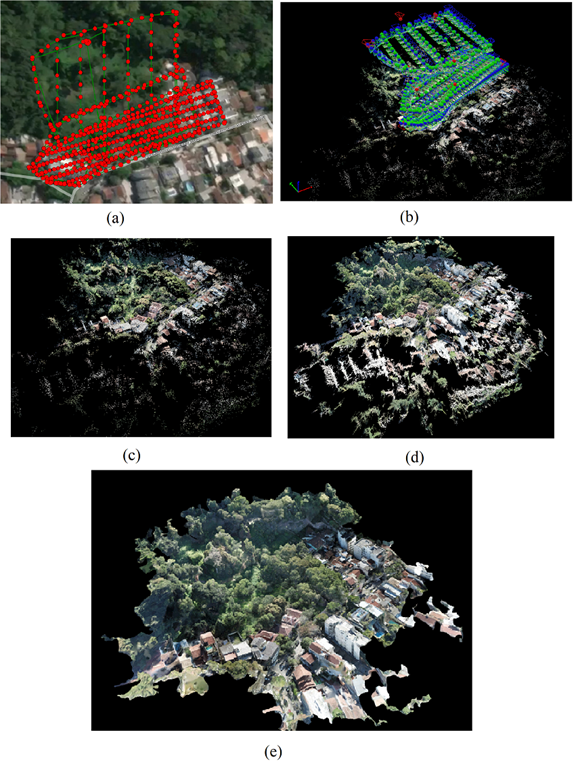

Figure 4: Reconstruction steps for BOC 60 in the PIX4D software; (a) image capture points on the map; (b) image capture points in 3D; (c) tie points; (d) dense point cloud; (e) textured 3D model.

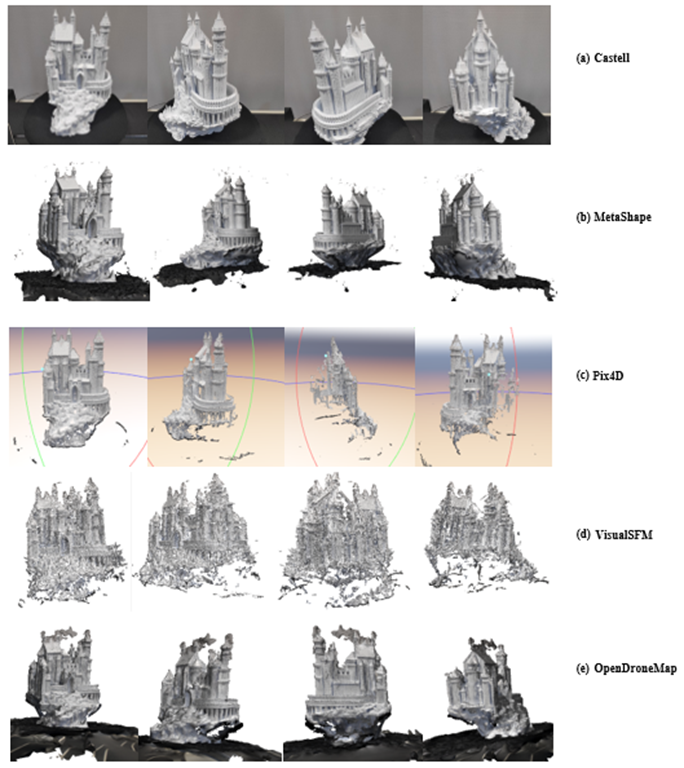

Figure 5: Visual comparison of three-dimensional reconstruction results.

The flight test of Figure 4 was carried out with the help of GPS in an urban setting with different types of buildings, vegetation, and complex shapes, resulting in three-dimensional models with high processing demand in the image matching step. These outdoor flights were carried out in partnership between IMPA and the UAV research group of the Laboratory of Robotics and Computational Intelligence of IME, to obtain images for aerophotogrammetry and to create a dataset. More information about it is available in [10].

The outdoor experiment was divided into three missions, each aiming at a different resolution (high, medium, and low), obtained by varying the flight height and the number of photos.
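The link between flight height and resolution is usually expressed as the ground sampling distance (GSD), the ground length covered by one pixel. The sketch below uses the standard pinhole relation GSD = (height × sensor width) / (focal length × image width). The camera parameters and the three heights are hypothetical round numbers, not the specifications of the aircraft or the missions in this paper.

```python
def ground_sampling_distance(height_m, sensor_width_mm, focal_length_mm, image_width_px):
    """Ground sampling distance in centimetres per pixel (pinhole model, nadir view)."""
    gsd_m = (height_m * sensor_width_mm) / (focal_length_mm * image_width_px)
    return gsd_m * 100.0


# Hypothetical camera: 6 mm sensor width, 4 mm focal length, 4000 px image width.
camera = dict(sensor_width_mm=6.0, focal_length_mm=4.0, image_width_px=4000)

# Hypothetical heights standing in for high-, medium-, and low-resolution missions.
gsd_by_height = {h: ground_sampling_distance(h, **camera) for h in (25.0, 50.0, 100.0)}
for height, gsd in gsd_by_height.items():
    print(f"height {height:5.1f} m -> GSD {gsd:.3f} cm/px")
```

Under this model the GSD grows linearly with height, so doubling the flight height halves the level of detail while covering the same area with fewer photos.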

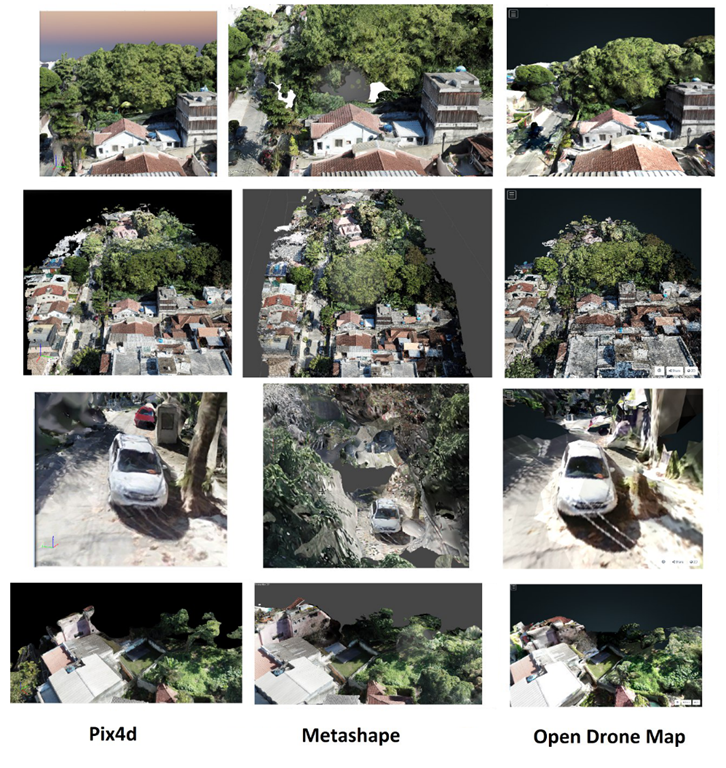

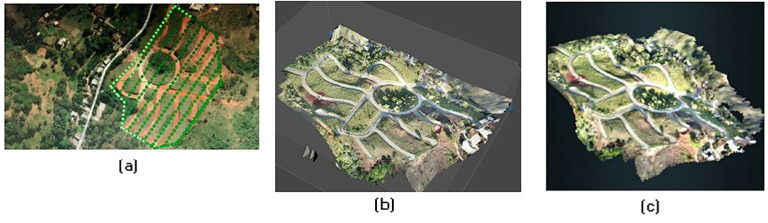

Figure 6 presents the trajectory of the experiment and the result obtained using Metashape (b) and OpenDroneMap (c).

Figure 6: Mapping experiment. (a) Trajectory; (b) Metashape result; (c) OpenDroneMap result.

Figure 4 shows the steps of the reconstruction. In (a), all the points where the UAV collected images are gathered; in (b), the camera poses (position and orientation) are presented in three-dimensional space; (c) shows the initial step, in which the tie points, characteristic points matched between the images, are computed; in (d), the initial points are clustered with neighboring points, resulting in a dense point cloud; and finally, in (e), the three-dimensional object is obtained by applying a mesh structure that connects the points of the cloud and a texture based on a montage of the images, forming an object close to the urban reality.

With the image dataset created, the next step was to reconstruct the mapped terrain. For this, three different software packages were used for the three-dimensional reconstruction: PIX4D (whose process was discussed earlier), Metashape, and OpenDroneMap. The visual comparison between the results can be seen in Figure 5. The experiment is important because of the large mapped area and the richness of detail of outdoor scenes. Another outdoor experiment can be viewed in our generated dataset [10].

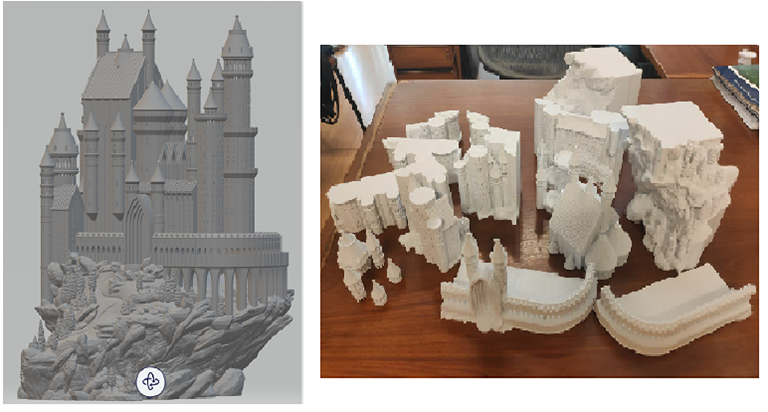

Another experiment used a ground truth model of a medieval castle. First, the model was 3D printed to obtain the physical object. The model is available from the website Thingiverse and is based on two castles, Schloss Lichtenstein and Neuschwanstein Castle, both located in Germany. The authors of the design and graphic modeling of the object make it available for download through [7]. Figure 7 shows the file to be printed and the parts that have already been printed. The model is approximately one meter high and is divided into 22 pieces. The model used in the experiment was glued according to the assembly instructions, but due to deformations caused during printing, some parts need finishing and painting to be as close as possible to the virtual model.

Figure 7: Medieval Castle experiment, 3D file visualization, printed parts and image of dataset.

Figure 8: Medieval Castle experiment, reconstruction using different tools.

Figure 8 compares the results of different tools for the same image set. Each tool handles the reconstruction process in its own way. Some focus directly on the object and smooth out the rest of the information; others look for the most prominent details in the image and smooth out the minor ones. Thus, some representations show the whole castle more solidly and the base less well represented, while in others the model presents the castle, the base, and the other elements that make up the image.

The experiment with the 3D-printed medieval castle was carried out to generate a reconstructed model for later comparison with a new experiment, to be carried out using a UAV in an indoor scene. In this partial experiment, the images were acquired with a smartphone camera and then processed in three-dimensional reconstruction software. With these results and the following experiments, the aim is to apply reinforcement learning algorithms to optimize the generated three-dimensional models.

Figure 9: Monument to the Heroes of Laguna and Dourados.

The Monument to the Heroes of Laguna and Dourados (Figure 9) is located in General Tibúrcio Square, at Praia Vermelha, in the Urca neighborhood, in the city and state of Rio de Janeiro, Brazil. It honors the episodes of the Retreat from Laguna and the Battle of Dourados, which took place in the context of the War of the Triple Alliance. The monument has a circumference of 53 meters and a base of white granite. Its total height is 15 meters, making it one of the most imposing sculptural sets in the city.

Besides its historical features, the monument is rich in detail, with a varied composition of materials and shapes: circular arrangements, squares, life-size statues, pedestals, and finishes in granite and bronze, among others. This variety supports the analysis of how well the generated model represents the real object. Completing the work, an underground crypt, nine steps below the monument, guards the ashes of the heroes of Laguna and Dourados.

Figure 10: Experiment with the Monument to the Heroes of Laguna and Dourados.

For the experiment, after the images were acquired through the flight with the remotely piloted aircraft, the set was processed by three-dimensional reconstruction applications to generate the model. The images were captured under natural light on a sunny day, and some interference of the luminosity can be observed in the result. The incidence of light is a factor linked to the quality of the generated model: it produces occlusions, which the tools treat by smoothing edges and lines that are not completely interpreted, so the final model is not reconstructed correctly.

Figure 9 compares the generated model with an image from the captured set. The parts where the incidence of the sun reduced the detail represented in the images appear as occlusions in the model. The faults appear at points where the information obtained from the images is not sufficient for a good reconstruction; the tools merge these parts, and the resulting model is not as expected.

For these and other reasons, optimization should be considered to refine the model: deep learning can be applied to the image sets in pre-processing and/or to the final model in post-processing to obtain a good result.

4 DISCUSSION

Given the particularities of each tool, the generated point clouds differ, and it becomes interesting to compare them to analyze the results.

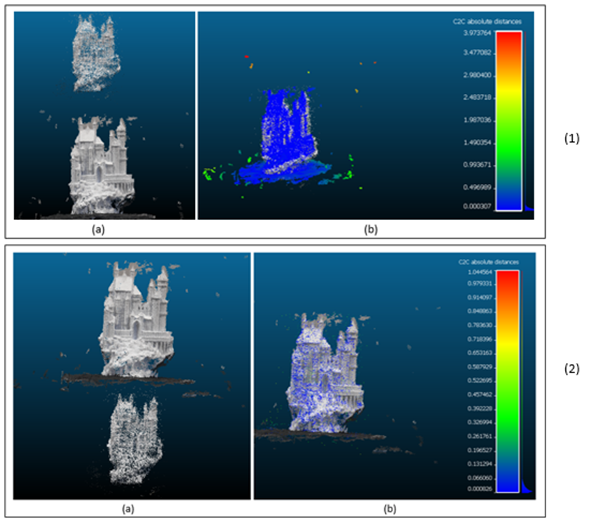

Figure 11: Point cloud comparison between Metashape and OpenDroneMap. (1) Metashape as reference. (2) OpenDroneMap as reference. (a) Inserted point cloud; (b) generated heat map.

CloudCompare software was used for comparison between point clouds [9]. CloudCompare is a point cloud processing tool with multiple metrics; it is an open-source and free project with a framework that provides a set of essential tools for manually editing and rendering 3D point clouds and triangular meshes.

The initial analyses were carried out on the reconstruction of the medieval castle image set, which obtained a good result. Since not all tools allow the files to be exported, the comparison was performed with the files generated by Metashape and OpenDroneMap.

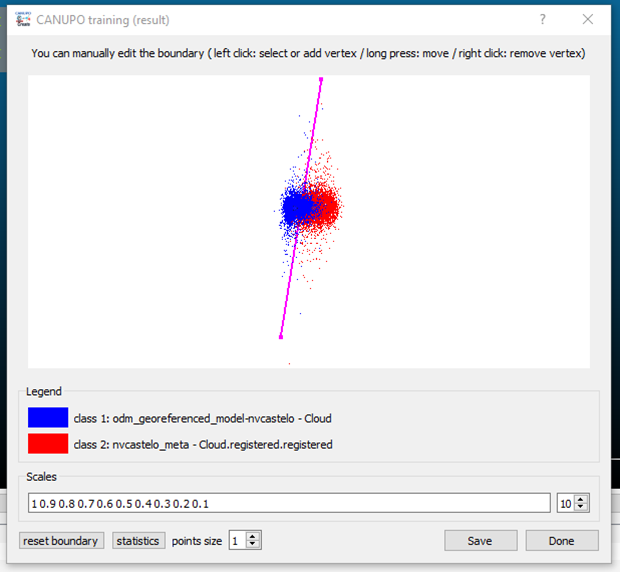

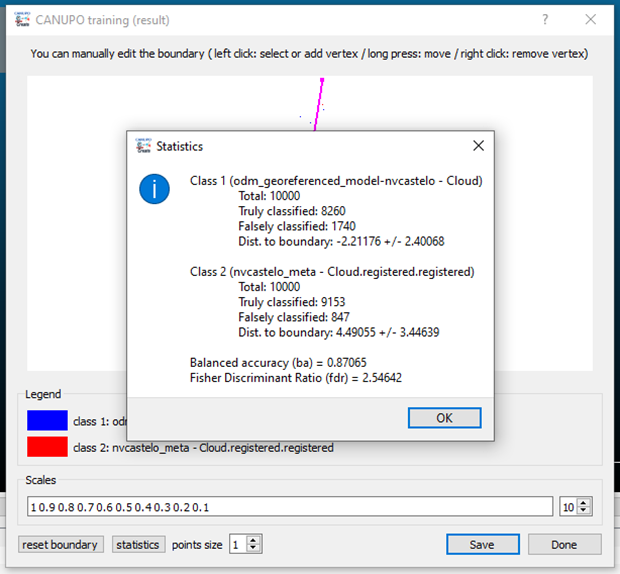

For the research, the distance between points was analyzed, with generation of a heat map. The clouds generated by each tool were loaded, and the analysis was performed in two stages: the first used the cloud generated by Metashape as the reference cloud, and the second used the cloud generated by OpenDroneMap as the reference cloud. The result obtained is shown in Figure 11. Figures 12 and 13 present the numerical results of the analysis within the software.
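The reason for running the analysis twice, swapping the reference cloud, is that a nearest-neighbour distance between clouds is not symmetric. The sketch below is a brute-force illustration on toy data and is not CloudCompare's implementation; the function name and the two small clouds are assumptions made for the example.

```python
import math
from statistics import mean


def cloud_to_cloud_distances(compared, reference):
    """For each point of `compared`, the distance to its nearest neighbour in `reference`."""
    return [min(math.dist(p, q) for q in reference) for p in compared]


# Toy clouds: cloud_b is cloud_a shifted by 0.1 along z, plus one stray point.
cloud_a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
cloud_b = [(0.0, 0.0, 0.1), (1.0, 0.0, 0.1), (0.0, 1.0, 0.1), (5.0, 5.0, 5.0)]

a_vs_b = cloud_to_cloud_distances(cloud_a, reference=cloud_b)  # cloud_b as reference
b_vs_a = cloud_to_cloud_distances(cloud_b, reference=cloud_a)  # cloud_a as reference

print(f"mean distance with B as reference: {mean(a_vs_b):.3f}")
print(f"mean distance with A as reference: {mean(b_vs_a):.3f}")
```

The stray point in the second cloud only affects the result when the first cloud is the reference, which shows how the choice of reference changes the statistics. The per-point distances are the values that a heat map colours.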

The distance between the points of the clouds shows a significant difference, and the scale and orientation of each cloud must be treated with due care in the comparison. With the result generated, it is possible to qualitatively analyze the generated clouds, identify the software that performs better, and assess the need for improvements through reinforcement learning.

Figure 12: Analysis of the metrics obtained from the comparison between OpenDroneMap and Metashape.

Figure 13: Analysis of the metrics obtained from the comparison between OpenDroneMap and Metashape.

5 CONCLUSION AND FUTURE WORK

Three-dimensional reconstruction techniques have become accessible and various tools have emerged, both commercial and open-source, and refining the generated model to improve results has become a target of research. The technology and ease of use of drones have made them a key means of acquiring the images on which reconstruction is based. To improve results in an innovative way, artificial intelligence applications based on machine learning have emerged to optimize image processing and the 3D model.

This study carried out drone flights to assess the agility and ease of these applications, and performed experiments with different image sets. The contribution of this project includes the creation of datasets with 3D scenes and objects, obtained through reconstruction from images captured by drones. This data is available to the academic community, was acquired with multiple capture devices, and was processed by the dedicated software listed in Table 1. As the work continues, this data is expected to be used in new optimization experiments with machine learning and reinforcement learning, to reduce distortions caused during image processing and increase the visual accuracy of the three-dimensional models.

ACKNOWLEDGEMENT

This study was financed in part by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior – Brasil (CAPES) – Finance Code 001. This work was carried out with the support of the Academic Cooperation Program in National Defense (PROCAD-DEFESA). It is also aligned with the cooperation project between BRICS institutions related to computer vision and applications of AI techniques.

REFERENCES

[1] ______. Boldmachines, Medieval Castle, 2018. Available at: https://www.thingiverse.com/thing:862724 [Retrieved: November, 2021]

[2] ______. CloudCompare – Open Source Project. Available at: https://www.cloudcompare.org [Retrieved: November, 2021]

[3] CASELLA, E. et al. Mapping coral reefs using consumer-grade drones and structure from motion photogrammetry techniques. Coral Reefs, v. 36, 2017, p. 269–275.

[4] COLICA, E. et al. Using unmanned aerial vehicle photogrammetry for digital geological surveys: case study of Selmun promontory, northern of Malta. Environ Earth Sci 80, p. 551, 2021.

[5] CRAIG, I. Vision as process. Robotica, Cambridge University Press, v. 13, n. 5, 1995, p. 540.

[6] HAN, X.; LAGA, H.; BENNAMOUN, M. Image-Based 3D Object Reconstruction: State-of-the-Art and Trends in the Deep Learning Era, IEEE Transactions on Pattern Analysis and Machine Intelligence, v. 43, n. 05, 2021, p. 1578-1604.

[7] KURZ, C.; THORMÄHLEN, T.; SEIDEL, H. Visual Fixation for 3D Video Stabilization. Journal of Virtual Reality and Broadcasting, p. 12, 2011.

[8] LIU, et al. Construction urban infrastructure based on core techniques of digital photogrammetry and remote sensing, in Information Technology and Applications, 2009. IFITA ’09. International Forum on, v. 1, 2009, p. 536-539.

[9] VELHO, L. C. P. Drone Datasets. 2020. Available at: https://www.visgraf.impa.br/dds/boc60/index.html [Retrieved: November, 2021]

[10] SEITZ, S. M. et al. A Comparison and Evaluation of Multi-View Stereo Reconstruction Algorithms, 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), 2006.

[1] Department of Defense Engineering, Military Institute of Engineering – Rio de Janeiro, Brazil. e-mail: taisegbs@ime.eb.br

[2] Department of Defense Engineering, Military Institute of Engineering – Rio de Janeiro, Brazil. e-mail: thiagojmb@ime.eb.br

[3] Department of Computer Graphics, Institute for Pure and Applied Mathematics – Rio de Janeiro, Brazil. email: lvelho@impa.br

[4] Department of Defense Engineering, Military Institute of Engineering – Rio de Janeiro, Brazil. e-mail: rpaulo@ime.eb.br